It’s easy to buy into the hype surrounding ChatGPT for customer service. The potential impact on customer service interactions is considerable. One McKinsey & Company report predicts that this and other forms of generative AI will add more than $4 trillion to the global economy every year.

The same report predicts that the lion’s share (75%) of this stimulus will be distributed across four key areas, customer operations among them. But what does using ChatGPT for customer service look like in practice? Can it really improve customer satisfaction?

And what about the lingering concerns surrounding large language models (e.g., GPT-4) and generative AI (e.g., ChatGPT)? Many companies, such as Apple and Samsung, have already restricted employee use of these tools to prevent data leaks. Then there are the ghosts lurking about.

Let’s parse the topic in detail.

4 possible use cases of ChatGPT for customer service

To understand potential use cases for the customer service industry, it helps to be clear about what ChatGPT actually is. It’s not a tool that customer service leaders can use to directly automate interactions with customers, or to replace the customer service representative.

Even if it were a set-it-and-forget-it robot, that would be a risky endeavor, at least according to these caveats described on the ChatGPT homepage:

“ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers. Fixing this issue is challenging, as: (1) during RL (reinforcement learning) training, there’s currently no source of truth; (2) training the model to be more cautious causes it to decline questions that it can answer correctly; and (3) supervised training misleads the model because the ideal answer depends on what the model knows, rather than what the human demonstrator knows.”

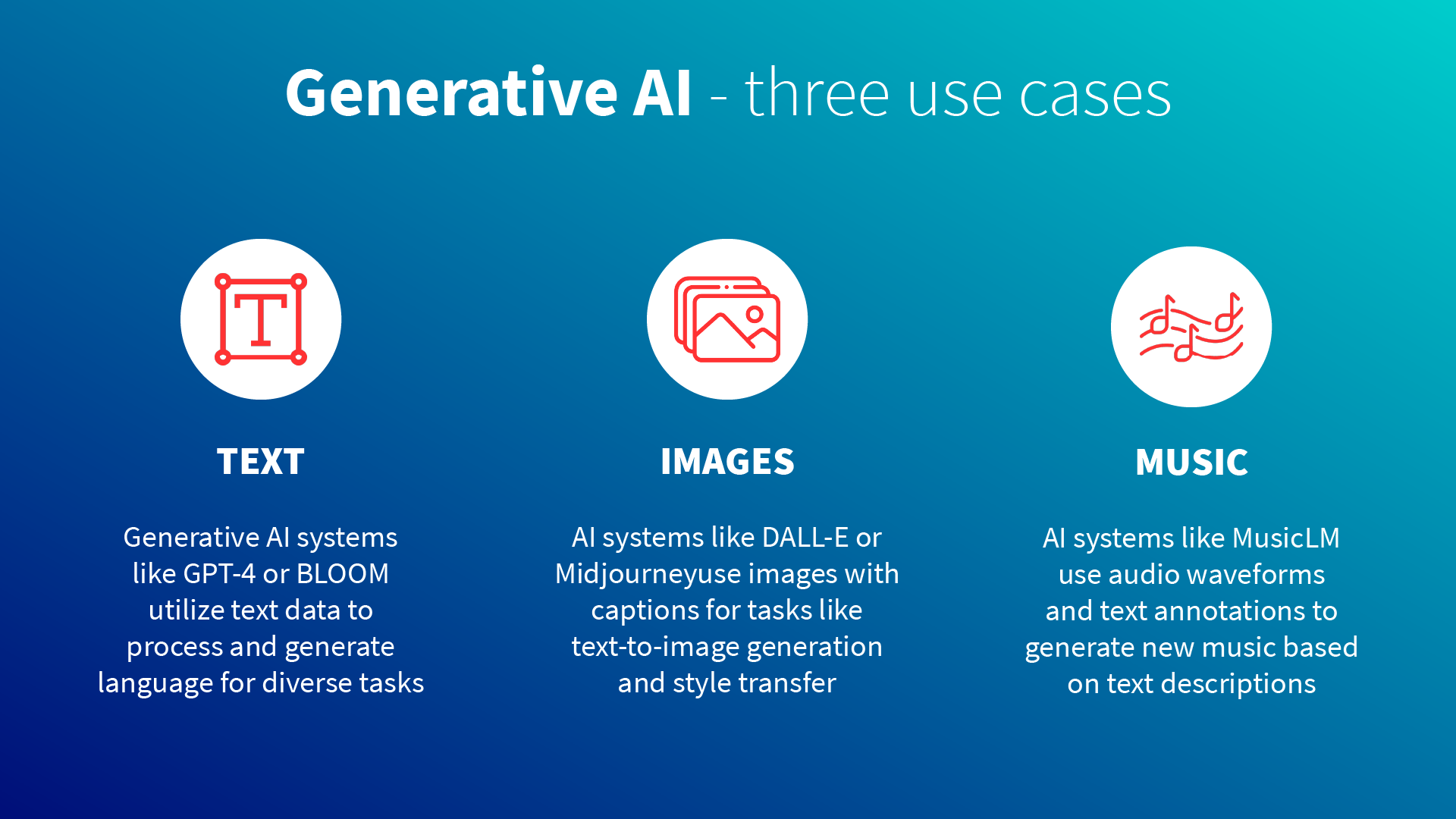

Which brings us to our first point: ChatGPT is a chatbot that operates on top of a large language model (LLM). In customer service, you could theoretically use the chatbot to generate personalized responses to customer inquiries, summarize cases for customer support agents, etc. As you’ll see, that approach is not without its risks.

You could also pay to train an LLM on your content (knowledge, documentation, etc.) for the purposes of a customer-facing chatbot. That doesn’t have to be the OpenAI LLM associated with ChatGPT. Salesforce, Microsoft, and many others are rolling out their own models, which, at least for now, they’re using together with OpenAI’s LLMs.

Either way, here are some of the more viable customer service use cases for generative AI.

1. Self-service chatbots handling customer inquiries

Some 82% of customer service leaders expect generative AI to “transform self-service and chatbots.” That’s because generative AI enables chatbots to conduct human-like customer interactions, produce natural responses to customer inquiries, and continually learn and improve.

Most of the examples you’ll currently encounter are chatbots running on top of an LLM of some kind, one that’s connected to, and trained on, a company’s existing knowledge base. Which is to say, ChatGPT itself won’t be your customer service chatbot.

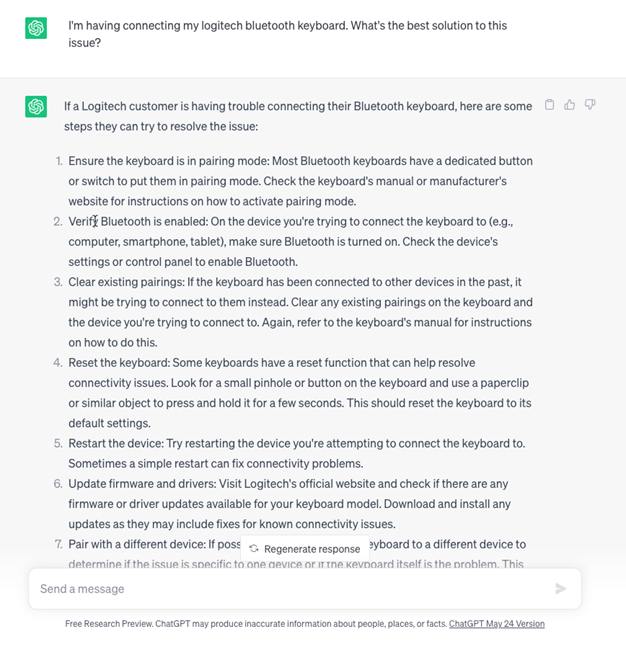

Using it as such would raise some concerns. Take this example prompt, depicted in the screenshot below:

Those seem like viable solutions to the question asked. But where does the information come from? Has it been verified? Is it trustworthy? The same guidelines that make for good chatbot experiences apply to generative AI for self-service: people using the chatbot need to trust it, understand it, and be sure that it’s providing the best answer.

2. Assistance for customer service agents

Ask any customer service representative and they’ll tell you they spend too much time looking for information. You don’t have to look far for some data points supporting this reality. Herein lies one of the more promising applications of ChatGPT for support teams.

Three useful prompts come to mind:

“Summarize this”

If an agent wanted to, they could pull up ChatGPT and ask it to automate certain tasks. “Hey, ChatGPT, summarize this customer case for me so I don’t have to waste time trying to figure it out,” for example. This could be very useful for large chunks of information that agents don’t have time to parse (LLMs are really good at summarizing a lot of text in a very short time).

Salesforce is currently piloting this functionality, having recently added summaries to its Service GPT capabilities. Similarly, Cisco is adding AI-generated chat and voice summaries to Webex.

“What’s the best solution?”

Or, “Hey ChatGPT, a customer has an issue with a bad installation. What’s the best solution?” This application could work especially well with a chatbot that’s trained on the knowledge base articles and thus capable of considering the best available solutions. Given the right resources, generative AI chatbots are very good at knowledge retrieval and recommended solutions.

Salesforce, for example, will combine its own Next Best Action AI with ChatGPT to deliver this functionality.
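To make the knowledge-retrieval idea concrete, here is a minimal Python sketch of the step that happens before any model is called: pick the knowledge base article that best matches the agent's question, then wrap it in a prompt that tells the model to answer only from that article. Everything in it is an assumption made for illustration, including the sample articles, the stop-word list, the word-overlap scoring, and the function names. Production systems typically use far more capable search, and this is not how Salesforce or any other vendor implements the feature.

```python
import re

# Hypothetical knowledge base: article titles mapped to body text.
KNOWLEDGE_BASE = {
    "Fixing a failed installation": (
        "If the installation fails, uninstall the app, restart the device, "
        "and run the installer again as administrator."
    ),
    "Resetting your password": (
        "Use the reset link on the login page to choose a new password."
    ),
    "Updating billing details": (
        "Open account settings and edit the payment method under billing."
    ),
}

# Small illustrative stop-word list so common words do not drive the match.
STOP_WORDS = {"a", "an", "the", "with", "what", "s", "is", "has",
              "to", "and", "on", "as", "if"}


def tokenize(text):
    """Lowercase the text and return its set of words, minus stop words."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOP_WORDS


def best_article(question, knowledge_base):
    """Return the (title, body) pair sharing the most words with the question.

    Returns None when no article shares any word with the question.
    """
    question_words = tokenize(question)
    best, best_score = None, 0
    for title, body in knowledge_base.items():
        score = len(question_words & tokenize(title + " " + body))
        if score > best_score:
            best, best_score = (title, body), score
    return best


def build_grounded_prompt(question, knowledge_base):
    """Build a prompt asking the model to answer only from the matched article."""
    match = best_article(question, knowledge_base)
    if match is None:
        return None  # nothing relevant found; better to hand off to a human
    title, body = match
    return (
        "Answer the customer's question using only the article below. "
        "If the article does not cover it, say so.\n\n"
        f"Article: {title}\n{body}\n\n"
        f"Question: {question}"
    )
```

The design choice worth noting is the `None` return: when nothing in the knowledge base matches, the sketch declines to build a prompt at all rather than letting the model improvise an answer.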

“Write a response to this question”

An agent could even use ChatGPT to quickly generate responses to certain questions or customer inquiries. “Hey ChatGPT, generate a response to this email from a customer: [insert customer email].” Even if the agent has to do some clean-up for the generated response, they’re likely saving valuable time. Salesforce calls these generative service responses, part of their AI for customer service automation suite.

For all of these use cases, the free ChatGPT tool can only go so far. An LLM trained on the company’s actual knowledge base articles or available scripts takes it a step further, potentially increasing the quality of responses. Bucher + Suter offers a paid version that includes this depth of functionality.

While the idea of an AI-powered assistant makes sense for customer service agents, many customer service teams already embed AI-powered search experiences within the agent interface. That solution is already widespread and viable, as are proprietary (and closed) generative AI solutions.

Again, both of these might be better choices if for no other reason than they avoid the privacy concerns associated with ChatGPT (as much as 11% of what employees paste into ChatGPT is confidential).

3. Agent training and onboarding for customer service teams

The National Bureau of Economic Research published a study of more than 5,000 customer service agents. The study found that a generative AI solution helped improve productivity by 14%. And it was new and less-skilled employees who benefited most from this kind of assistance.

One can imagine the convenience and self-sufficiency that come with having an intelligent, ever-learning, highly connected assistant at hand during training and onboarding. No need to bother a colleague for an answer or resource. No need to place a call or write an email when you’re out in the field on a call.

By extension, the faster an agent can get up to speed, the better the customer experience and—hopefully—customer satisfaction.

4. Creating content & “case swarming”

Generative AI is nothing if not prolific. These models can generate vast amounts of content far faster than any human could. Within the service and support sphere, such a tool could potentially be used to automatically generate new knowledge content.

Generative AI is also capable of connecting the dots in support of case swarming. That is, helping support teams come together to solve complex cases by identifying what worked in the past for similar cases, who was involved, etc.—all brought in from a variety of available sources.

Salesforce plans to use generative AI for both of these use cases. However, you’ll notice that, for both scenarios, some form of human checking or vetting is still required to make it work—generative AI can’t quite do it all on its own.

Beware the hallucinations (among other limitations)

We’ve already mentioned some of the limitations and concerns around generative AI in customer service. OpenAI states plainly that its own creation might present false information as fact (or “hallucinate,” as this little foible is commonly referred to).

The same Contact Center Weekly report referenced above found that 68% of customer service leaders also worry about customer manipulation, while 62% worry about “adverse impact on the human touch” within the customer experience.

Clearly, trepidation is on par with hype here. Of the many risks and ethical considerations of generative AI, here are a few important ones to keep in mind for your own implementations:

Security

Is generative AI a security threat? One UK spy agency certainly thinks so. The technology can be exploited (see: malware designed to harvest ChatGPT credentials), especially on openly accessible platforms that have already collected a lot of potentially sensitive data.

Data breaches

If you’re wondering why ChatGPT is so freely accessible, it’s because the model needs data continuously to improve. A lot of it. When you use ChatGPT, your conversations may be used to train its generative AI models. We’ve already mentioned the propensity for people to hand these models sensitive, sometimes proprietary, data.
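One common mitigation is to scrub obvious personal data from a customer message before anyone pastes it into an external tool. The Python sketch below shows the idea under loud assumptions: the two regular expressions, the placeholder labels, and the `redact` function are illustrative only. They catch simple email addresses and phone-like numbers, and nothing else, so they are no substitute for a proper data loss prevention tool.

```python
import re

# Illustrative patterns only; real deployments need far broader coverage
# (names, addresses, account numbers, and so on).
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_PATTERN = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    text = PHONE_PATTERN.sub("[PHONE]", text)
    return text


message = (
    "Hi, I'm Dana (dana.lee@example.com). "
    "Call me at +1 415-555-0134 about order 88213."
)
print(redact(message))
# prints: Hi, I'm Dana ([EMAIL]). Call me at [PHONE] about order 88213.
```

Note that the short order number survives, because the phone pattern only matches longer digit runs. Whether an order number counts as sensitive is a policy decision the sketch does not make for you.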

Cost

Because it relies on extensive infrastructure, ChatGPT costs a lot to run and maintain—for OpenAI, that is. Indeed, research published in MIT Technology Review found that training just one AI model can create massive carbon emissions.

While the cost of things like computing power and cloud resources now falls on the platform’s host, it’s unclear how these costs will be passed down to the companies using these platforms. It’s certainly something to consider.

Will ChatGPT revolutionize support?

Generative AI—be it ChatGPT or another solution—is definitely a capable technology. Its impact on customer experience and customer satisfaction could be massive. In many respects, it might very well be the revolutionary breakthrough that all the hype suggests.

Still, as far as exceptional customer service goes, the arrival of this technology doesn’t come free of concern. Many customer support teams are still treading lightly.

That’s because there’s still something to be said for the human touch in customer service. As valuable as ChatGPT might be for saving time and improving productivity, there are certain aspects of service that this technology is incapable of replacing.

Moving forward, the best solutions will be those that walk this fine line between customer service automation and human touch. AI and new LLMs will undoubtedly play a role, but one more layered than the hype around ChatGPT suggests.

If you’d like to understand more about Bucher + Suter’s approach to generative AI, please contact us using the form below.